When AI Contradicts Your Mission: What ASICS Taught Us About Training Technology on Values

How one athletic brand rebuilt its AI and brand strategy around its mission, and how nonprofits can apply the TAG three-pillar decision framework to do the same.

Most brands let AI amplify their blind spots. ASICS did the opposite, and in doing so, created a blueprint every nonprofit should follow.

Last week at the Digital Marketing World Forum in New York, I watched Alice Mitchell, VP of Global Brand & Digital Innovation at ASICS, deliver what I can only describe as a masterclass in mission-aligned AI implementation.

Her presentation wasn't just about marketing strategy. It was about something far more critical: ensuring the technology we adopt reflects the values we claim to hold.

Why This Matters Right Now

Here's the uncomfortable truth: AI doesn't just automate tasks. It scales decisions, amplifies patterns, and institutionalizes whatever biases exist in its training data. For nonprofits already stretched thin, the pressure to "adopt AI" can lead to rushed implementations that undermine the very missions they're meant to serve.

ASICS proved there's a better way. And their approach offers a framework any purpose-driven organization can follow.

The ASICS Story: From Performance to Permission

Five years ago, ASICS made a strategic decision that would reshape their entire brand identity. They launched Vision2030, a long-term strategy returning to their founding philosophy: Anima Sana In Corpore Sano (which translates to "A sound mind in a sound body").

This wasn't just a tagline refresh. It was a fundamental shift in what they chose to market: the feeling of movement, not just the outcome. Mental health wins, not podium finishes. The permission to start, not the pressure to perform.

The project began in late 2019, involved extensive employee surveys and cross-departmental collaboration, and was officially announced in November 2020. For years afterward, this mission guided their campaigns, their partnerships, and their community engagement. They thought they had it figured out.

Then in 2023, they hit a wall.

The Discovery

ASICS's research revealed something striking: 55% of people said they would be more likely to exercise if they saw everyday people in ads. Not elite athletes or perfect bodies, but people who looked like them.

The gap wasn't performance. It was permission.

Armed with this insight, they decided to test their AI tools. What happened when they prompted generative AI to "show someone who exercises"?

The results were disheartening but predictable: chiseled abs and peak-performance bodies, repeating the same narrow definition of "fitness" that had dominated athletic marketing for decades.

Their research went deeper. They found that 72% of people felt AI-generated exercise images were unrealistic, and critically, 18% said these AI images demotivated them from exercising entirely.

ASICS's research showed how AI-generated images of "exercise" perpetuated bias (left) vs. their retrained AI showing diverse, real bodies (right).

Their mission-first rebrand was being undermined by AI's learned biases.

Alice Mitchell put it plainly in her presentation: if you don't train AI on your values, it will train itself on everyone else's, and in the process, contradict everything you stand for.

The Solution: Building an AI Training Program

ASICS didn't abandon AI. They rebuilt it.

In April 2023, they launched the ASICS AI Training Programme, a comprehensive effort to retrain AI models on what exercise actually looks like for real people.

They partnered with AI expert Omar Karim to develop custom training datasets featuring diverse bodies, abilities, ages, and fitness levels. The initiative included:

Custom AI training on realistic exercise representations

An open-source image bank for other organizations to use

Public participation through the #TrainingAI hashtag for crowdsourcing diverse images

Internal guidelines for when and how AI should be used in their marketing

Omar Karim described AI-generated bias as "today's equivalent of airbrushing, only it's being done automatically and without human judgement." ASICS's solution put that human judgment back in.

The result was their New Personal Best campaign, launched in October 2023 in partnership with the mental health charity Mind. The campaign flipped traditional athletic marketing on its head:

→ Mental health wins, not podium finishes

→ Tom Durnin, who finished last at the 2023 London Marathon (completing it in 8:10:58 after recovering from a car crash), became their campaign ambassador for showing up and prioritizing his mental health

→ No stats. No medals. Just how movement made people feel

The campaign featured real people celebrating personal victories: a first 5K, managing anxiety through running, moving their body after injury, joining a cycling group to cope with grief. It was permission, not performance.

The Results

Since refocusing their entire brand (and their AI tools) on mission alignment, ASICS reports:

→ Significant market share and revenue lifts

→ Stronger brand health scores and employee engagement

→ Sold-out product drops that moved in hours, not weeks

But perhaps most importantly, they demonstrated that mission-first isn't just ethical. It's effective.

What This Means for Nonprofits

Here's the lesson that kept echoing in my head throughout Alice's presentation:

AI won't fix an unclear mission. It will scale it.

If your organization hasn't clearly defined what you stand for, AI will simply amplify your uncertainty. If bias exists in your processes, AI will institutionalize it. If your values are aspirational but not operationalized, AI will expose that gap faster than any audit.

ASICS had clarity. They knew their mission was mind-body health, permission over performance, movement for everyone. When AI contradicted that mission, they had the framework to recognize it and the conviction to fix it.

Clarity like that doesn't happen by accident, and neither did ASICS's response when AI undermined it.

How ASICS Made Their Decision

Their process reveals key decision points any organization can learn from:

They started with mission clarity. Vision2030 (developed through extensive employee input and stakeholder discussions in 2019-2020) gave them a clear north star: movement for mind and body health, not performance metrics.

They did the research. When they tested AI image generators in 2023, they didn't just assume the outputs were fine. They investigated what AI was producing and surveyed how it made people feel.

They recognized the contradiction. Their research found that 72% of people felt AI-generated exercise images were unrealistic, and 18% said the images demotivated them from exercising. That directly contradicted their mission of making movement accessible.

They built a values-aligned solution. Rather than accept AI's defaults, they partnered with AI expert Omar Karim to create custom training datasets featuring real people exercising for the feeling, not aesthetics. They even invited the public to contribute images with #TrainingAI.

They maintained human judgment. Instead of accepting what Omar Karim called automated "airbrushing," ASICS put human decisions back into how their community was represented.

A Framework for Nonprofits: Learning from ASICS's Approach

ASICS had resources most nonprofits don't. But how can smaller organizations systematically make these kinds of values-aligned AI decisions?

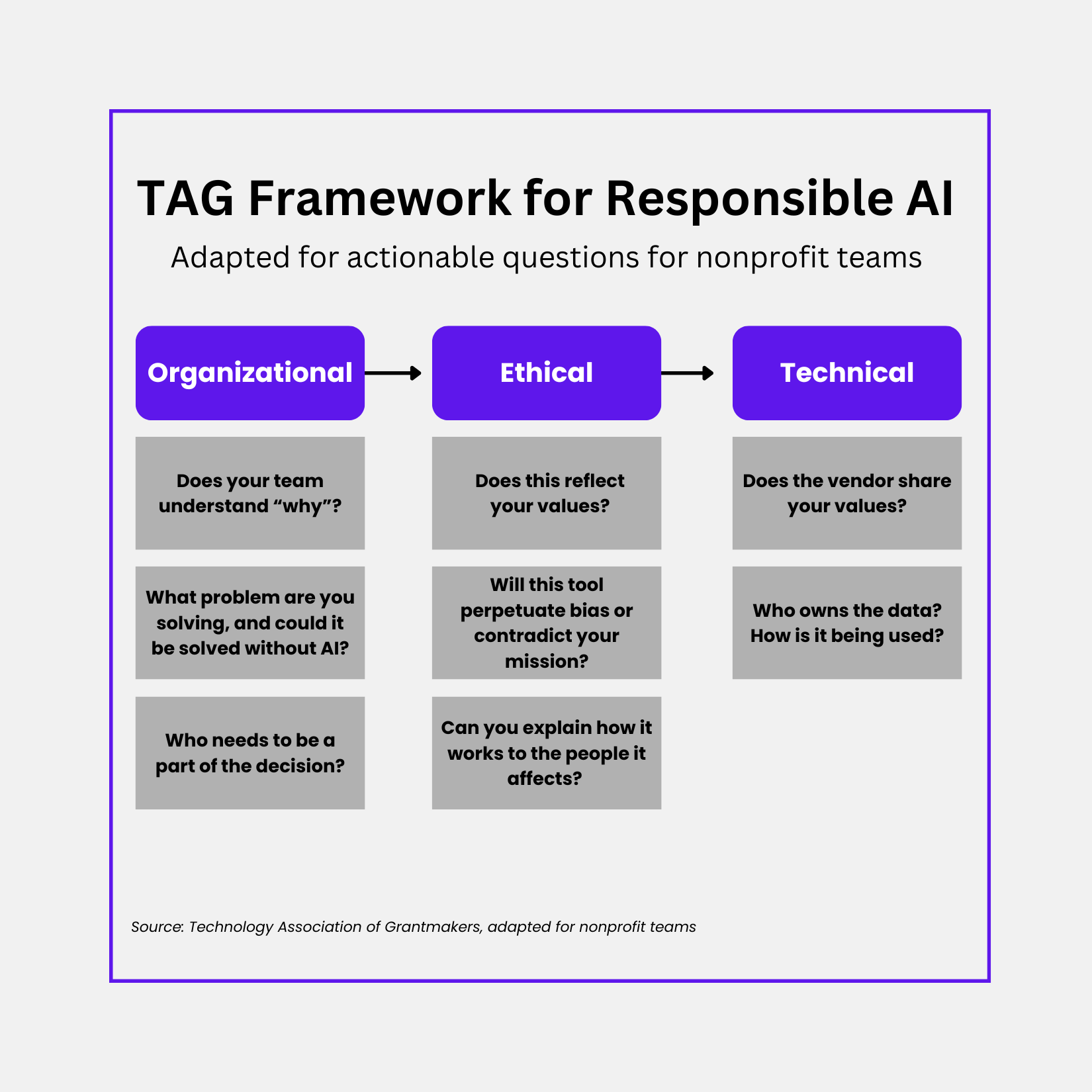

Nonprofit teams across the country are using a framework from the Technology Association of Grantmakers (TAG) to guide exactly these kinds of decisions. Developed by TAG with Project Evident, the three-pillar approach to responsible AI adoption in philanthropy was informed by input from more than 300 practitioners.

When I look at ASICS's story through this lens, their approach mirrors what TAG recommends. Here's how nonprofits can apply the same principles:

Technology Association of Grantmakers (TAG) AI Adoption Framework, adapted by Hello Impact into actionable questions for nonprofit teams

PILLAR 1: ORGANIZATIONAL READINESS

Core Question: Does your team understand the "why"?

Before adopting any AI tool, ask:

What problem are we actually trying to solve?

Could this problem be solved without AI?

Who needs to be part of this decision? (Include program staff, the people you serve, and leadership, not just IT)

Do we have the capacity to implement and maintain this responsibly?

What ASICS showed us: They had organizational clarity around Vision2030, developed with input from employees across departments over nearly a year. When AI contradicted that vision, every stakeholder understood why it mattered.

PILLAR 2: ETHICAL ALIGNMENT

Core Question: Does this reflect our values?

Before implementing AI, ask:

Will this tool perpetuate bias or contradict our mission?

Can we explain how it works to the people it affects?

Does this represent the communities we serve with dignity?

If we hesitate to publish how we're using this tool, should we be using it at all?

What ASICS showed us: They researched how AI outputs made people feel and discovered that the images felt unrealistic and, for some people, demotivating. This contradicted their core value that movement is for everyone, so they acted to fix it.

PILLAR 3: TECHNICAL EVALUATION

Core Question: Is this the right tool?

Before committing to an AI vendor or platform, ask:

Does the vendor share our values, or are they just selling us a product?

Who owns the data we input? How is it being used?

What happens to our data if we stop using this tool?

Can we turn off or adjust the AI if it produces problematic outputs?

What ASICS showed us: They didn't just adopt off-the-shelf AI tools. They partnered with an AI expert who shared their values, built custom training datasets, and created a public mechanism for ongoing input (#TrainingAI). Their values, not the tools' defaults, stayed in control.

Why This Framework Matters for Your Organization

The principles behind ASICS's decisions are accessible to any organization:

→ Know your mission (Organizational)

→ Research whether AI contradicts it (Ethical)

→ Build or choose solutions that reflect your values (Technical)

TAG's framework gives nonprofits a systematic way to apply these principles to every AI decision, from choosing a chatbot for donor communications to using generative AI for grant writing to selecting image libraries for your website.

You don't need ASICS's budget. You need their clarity about what you stand for and a process to ensure your technology reflects it.

The Part Most People Miss

Here's what Alice made clear in her presentation: ASICS uses AI for personalization, content creation, and campaign optimization. They're not anti-AI. They're pro-discernment.

They use AI when it aligns with their caregiving identity. They don't use it when it contradicts their values or replaces human judgment about how to represent their community.

The real risk isn't that AI will replace creativity. It's that we'll let it replace discernment (the human judgment that keeps our work rooted in values).

That same filter applies to nonprofits. Before automating a process, generating content, or adopting a new tool, pause and ask:

→ Does this reflect our mission?

→ Does it represent the people we serve with dignity?

→ If we're unsure, who should we ask?

AI can scale your message. It can increase your efficiency. It can save your team time.

But it can't protect your integrity. That part is still up to us.

How to Apply This to Your Organization

Ready to put the TAG framework into practice? Here are your next steps:

Immediate Actions (This Week)

□ Audit your current AI tools against the three pillars. Are they organizationally sound, ethically aligned, and technically trustworthy?

□ Identify one area where AI might be contradicting your mission. (Common culprits: donor communications, image generation, automated responses)

□ Schedule a team discussion about your "AI values statement." What's non-negotiable? What would you refuse to automate?

Short-Term Actions (This Month)

□ Download TAG's full framework and review it with leadership and program staff

□ Explore ASICS's AI Training Programme if your organization uses AI-generated imagery

□ Create simple decision criteria for when to use AI vs. when to keep humans in the loop

Long-Term Actions (This Quarter)

□ Develop an AI implementation checklist based on the TAG framework

□ Train staff on how to evaluate AI tools through a mission lens

□ Build accountability into your AI adoption process (Who checks for bias? Who can raise concerns?)

Resources to Explore

TAG's Responsible AI Framework

The full framework document from Technology Association of Grantmakers

Download the framework (PDF)

ASICS AI Training Programme

Access their approach and learn about training AI on diverse representation

Visit ASICS AI Training Programme

ASICS New Personal Best Campaign

See how they redefined personal best around feeling, not performance

Explore New Personal Best

Hello Impact AI Consulting

Need help implementing the TAG framework at your organization? Let's talk.

Schedule a consultation

The Bottom Line

ASICS didn't win by adopting AI first. They won by knowing why they exist and training AI to reflect the communities they serve.

Your nonprofit doesn't need the biggest AI budget or the most sophisticated tools. You need clarity about your mission and the conviction to ensure your technology reflects it.

Start there. The rest will follow.

Let's Figure This Out Together

Have you seen a nonprofit or social enterprise use AI in a way that clearly reflects its mission? Or have you encountered an example where AI contradicted an organization's values?

Drop a comment below or email me at hello@helloimpact.org. I'm collecting case studies for an upcoming resource, and I'd love to feature organizations getting this right (or learning from getting it wrong).

Want more frameworks like this delivered to your inbox?

Join the Hello Impact newsletter for practical AI strategies, automation workflows, and mission-first technology insights designed specifically for nonprofit teams.


About the Author

Shakura Conoly is the founder of Hello Impact, an AI consultancy focused on helping nonprofits implement technology solutions that save time without compromising mission. With over 20 years of experience in marketing, communications, and nonprofit leadership, she translates cutting-edge tools into solutions that work for resource-stretched teams. She currently serves as National Director of Community Partnerships at Inspiritus and Thrive Community Lending.

Connect with Shakura on LinkedIn or learn more at helloimpact.org.

Sources & References

- ASICS Corporation. (2020). "The Journey to Formulating VISION2030." Vision developed 2019-2020, announced November 2020. https://corp.asics.com/en/stories/project-story01 and https://corp.asics.com/en/press/article/2020-11-18

- Mind & ASICS. (2023). "New Personal Best Campaign." Research: 55% more likely to exercise seeing everyday people, 68% too embarrassed for gym, 78% don't find sports ads motivating. Tom Durnin featured as ambassador. https://www.mind.org.uk/news-campaigns/news/mind-asics-mark-world-mental-health-day-by-launching-new-personal-best-campaign/

- ASICS. (2023). "ASICS AI Training Programme." April 2023 launch. Research: 72% felt AI images unrealistic, 18% demotivated by AI images. Partnership with Omar Karim. https://www.asics.com/gb/en-gb/mk/trainingai and https://news-emea.asics.com/archive/asics-calls-out-ai-for--exercise-airbrushing--and-offers-ai-training-programme-to-teach-ai-the-trans/s/e94ca12a-9d3d-4112-af80-24e65f5633ac

- Contagious. (2025). "Personal Best: ASICS." Results since mission refocus including market share, brand health, and engagement lifts. https://www.contagious.com/iq/article/personal-best-asics

- Technology Association of Grantmakers (TAG) & Project Evident. (2023). "Responsible AI Adoption in Philanthropy: An Initial Framework for Grantmakers." Three-pillar framework (Organizational, Ethical, Technical) informed by 300+ practitioners. https://www.tagtech.org/wp-content/uploads/2024/01/AI-Framework-Guide-v1.pdf

Published: October 18, 2025

Reading time: 8 minutes